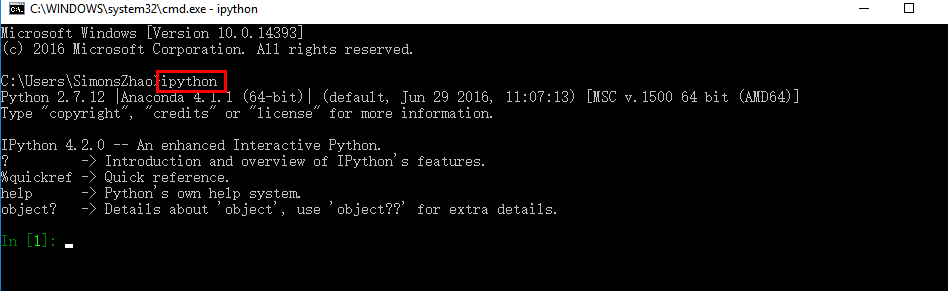

To test whether your installation was successful, open Command Prompt, change to the SPARK_HOME directory and type bin\pyspark. Or, if you prefer pip, run: pip install pyspark. If you use conda, simply run: conda install pyspark.
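As a complement to launching the shell, the pip or conda install can also be checked from Python itself. The following is a minimal sketch, not part of the original instructions; the helper name `pyspark_version` is made up for illustration. It only reports whether the package can be imported from the current environment, and does not start Spark.

```python
import importlib
import importlib.util
from typing import Optional


def pyspark_version() -> Optional[str]:
    """Return the installed pyspark version, or None if it cannot be found.

    Hypothetical helper for illustration only.
    """
    # find_spec returns None when the package is not on the import path.
    if importlib.util.find_spec("pyspark") is None:
        return None
    module = importlib.import_module("pyspark")
    return getattr(module, "__version__", None)


version = pyspark_version()
if version is None:
    print("pyspark is not importable from this Python environment")
else:
    print("pyspark", version, "is installed")
```

If the message says pyspark is not importable, the most likely cause is that pip or conda installed it into a different environment than the one running this script.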

#How to install pyspark in anaconda download

Download the Spark tarball from the Spark website and untar it: tar zxvf spark-2.2.0-bin-hadoop2.7.tgz. Please refer to these articles (Docker, JDK, CDH) if the prerequisites are not met. Starting with Spark 2.2, it is very easy to set up pyspark. Spark can be run in local mode using the built-in standalone cluster scheduler.

Steps to install the Anaconda parcel on your CDH manager. This way, you will be able to download and use multiple Spark versions. Select the latest Spark release, a prebuilt package for Hadoop, and download it directly. You should be redirected to the web page. Wait a while for the install to finish; it is a package of about 200 MB. Change the execution path for pyspark.

Also, how do I download PySpark? To install Spark, make sure you have Java 8 or higher installed on your computer.

First you have to install jupyter_contrib_nbextensions in Anaconda Prompt: pip install jupyter_contrib_nbextensions. Then run, in order: pip3 install jupyter-tabnine --user; jupyter nbextension install --py jupyter_tabnine --user; jupyter nbextension enable --py jupyter_tabnine --user; jupyter serverextension enable --py jupyter_tabnine --user. Next there will be a pop-up to install two packages, where you should click APPLY.
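The Java 8 requirement mentioned above can be checked before installing anything else. The sketch below is an illustration under assumptions, not part of the original steps: the function names `parse_java_major` and `installed_java_major` are hypothetical, and the parsing assumes the usual `java -version` banner, where Java 8 and earlier report themselves as 1.x.

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_java_major(version_output: str) -> Optional[int]:
    """Extract the major Java version from `java -version` output."""
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        return None
    major = int(match.group(1))
    # Java 8 and earlier use the "1.x" scheme, e.g. "1.8.0_281" means Java 8.
    if major == 1 and match.group(2) is not None:
        return int(match.group(2))
    return major


def installed_java_major() -> Optional[int]:
    """Return the major version of the `java` found on PATH, if any."""
    java = shutil.which("java")
    if java is None:
        return None
    try:
        # `java -version` writes its banner to stderr rather than stdout.
        result = subprocess.run(
            [java, "-version"], capture_output=True, text=True, timeout=30
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    return parse_java_major(result.stderr or result.stdout)


print("Java major version:", installed_java_major())
```

A result of `None` means no usable `java` was found on PATH, and a number below 8 means the installed Java is older than the version the text asks for.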

Here I'll go through, step by step, how to install pyspark locally on your laptop.

#How to install pyspark in anaconda code

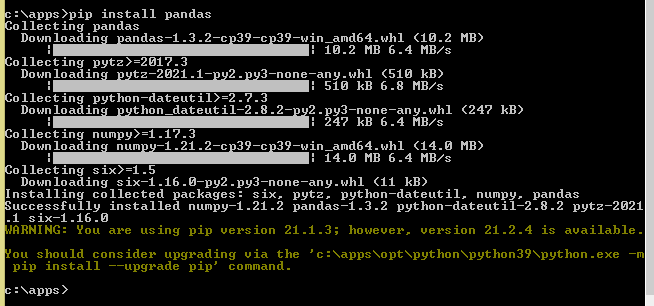

In the code below I install pyspark version 2.3. It is very important that the pyspark version you install matches the version of Spark that is running and that you plan to connect to. Then go to your computer's Command Prompt. With the dependencies mentioned previously installed, head to a Python virtual environment of your choice and install PySpark as shown below. To install Spark on your laptop, the following three steps need to be executed. Ensure that you tick Add Python to PATH when installing Python. You should begin by installing Anaconda, which can be found here (select your OS from the top).
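The version-matching requirement above can be turned into a small check. This is a sketch under assumptions: the helper names `major_minor` and `versions_match` are hypothetical, and it treats two versions as matching when their major and minor components agree, which is one reasonable reading of "matches" rather than a rule stated in the text.

```python
from typing import Tuple


def major_minor(version: str) -> Tuple[int, int]:
    """Return the (major, minor) pair of a version string such as '2.3.0'."""
    parts = version.strip().split(".")
    return int(parts[0]), int(parts[1])


def versions_match(pyspark_version: str, cluster_version: str) -> bool:
    """Assumed rule: versions match when major and minor numbers are equal."""
    return major_minor(pyspark_version) == major_minor(cluster_version)


# Example values only; substitute the versions from your own setup.
print(versions_match("2.3.0", "2.3.2"))
print(versions_match("2.3.0", "2.4.0"))
```

If the two differ, pinning the client with pip's `==` version specifier, for example `pip install pyspark==2.3.0`, is one way to bring it in line with the cluster.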
